By Ifeoma Ben, LLM, MBA

In Nigeria, the digital revolution has significantly transformed communication, enabling individuals to express opinions, share information, and mobilize communities through social media platforms. However, this surge in digital interaction presents complex challenges in balancing the protection of free speech with the need to regulate harmful content.

Regulatory Frameworks and Initiatives

The Nigerian government has implemented several measures to address content moderation. The National Information Technology Development Agency (NITDA) introduced the Code of Practice for Interactive Computer Service Platforms, aiming to enhance proactive content moderation and curb misinformation. This code encourages platforms to employ advanced technologies and strategies to identify and mitigate the spread of false information.

Additionally, the Cybercrimes (Prohibition, Prevention, etc.) Act 2015, as amended, criminalises the use of the internet for terrorist purposes and prohibits the dissemination of various types of harmful content. The Act provides a framework for preventing and prosecuting cybercrimes, reflecting Nigeria's commitment to maintaining a safe online environment.

Challenges in Content Moderation

Despite these regulatory efforts, significant challenges persist. In one notable case, Meta's Oversight Board raised concerns about the company's failure to promptly remove a viral Facebook video that threatened LGBTQ+ individuals in Nigeria. The video remained on the platform for five months, exposing deficiencies in content moderation, particularly in detecting harmful content in local languages and understanding local context.

Furthermore, the Nigerian government’s attempts to regulate social media have sparked debates about freedom of expression. The proposed Anti-Social Media Bill faced significant opposition from civil society organizations and human rights activists, who argued that it could suppress free speech and undermine democratic principles.

The Path Forward

Balancing content moderation with the protection of free speech requires a nuanced approach. It is essential to develop laws that set rights-respecting rules for unlawful online content and activity. Careful definitions of terms such as "hate speech" are crucial to ensure that only speech warranting legal restriction is targeted, thereby safeguarding freedom of expression.

Moreover, social media platforms must invest in improving their content moderation systems, particularly in understanding local languages and cultural contexts. This includes employing advanced technologies and increasing human oversight to effectively identify and manage harmful content without encroaching on individual rights.

In conclusion, as Nigeria navigates the complexities of content moderation in the digital age, a collaborative approach involving the government, social media platforms, civil society, and users is vital. By working together, it is possible to create a digital environment that upholds free speech while protecting individuals and communities from harm.

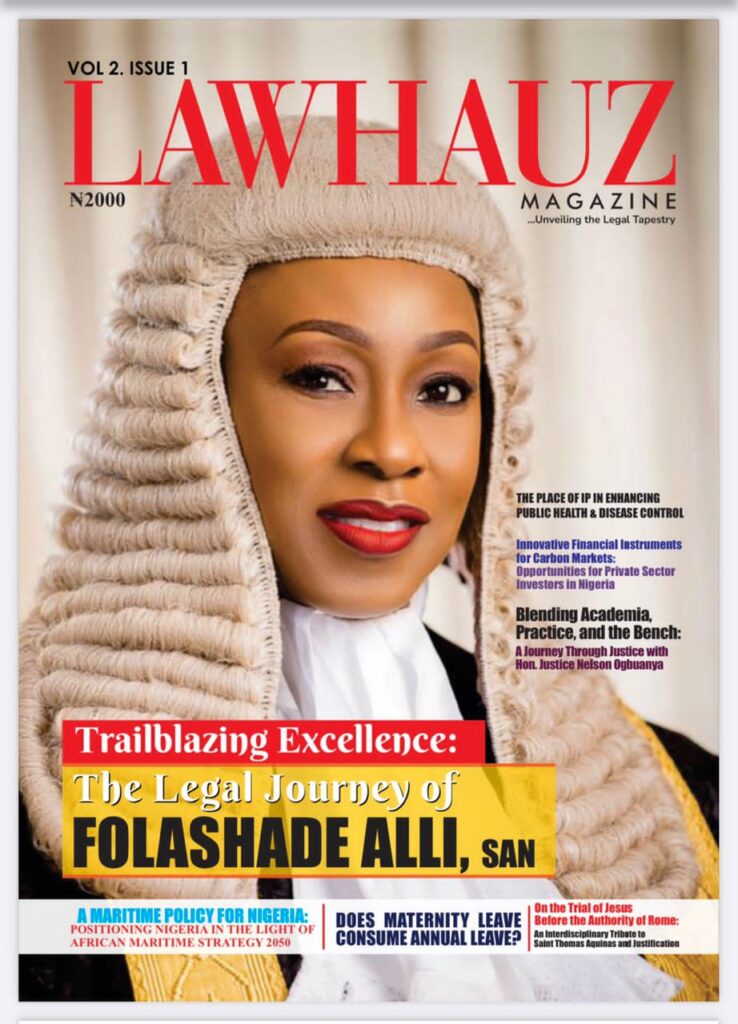

Ifeoma Ben, a Partner at The Law Suite and Editor-in-Chief of Lawhauz Magazine, can be reached on 08033754299.